IBM PC personal computers

A typical IBM PC personal computer consists of the following main units:

- system unit (in a vertical or horizontal case);

- monitor (display) for displaying text and graphics;

- keyboard, which lets you enter various characters into the computer.

The most important unit of a computer is the system unit, which contains all of its main components: the microprocessor, random access memory (RAM), read-only memory (ROM), the power supply, input/output ports and disk drives.

In addition, the following devices can be connected to the PC system unit:

- printer, for printing text and graphics;

- mouse, a pointing device that controls the graphical cursor;

- joystick, used primarily in computer games;

- plotter (graph plotter), a device for printing drawings on paper;

- scanner, a device for reading graphic and text information into the computer;

- CD-ROM drive, a compact disc reader used to play video, text and sound;

- modem, a device for exchanging information with other computers over the telephone network;

- streamer, a device for storing data on magnetic tape;

- network adapter, a device that allows the computer to work on a local network.

The main components of a personal computer are the processor, memory (RAM and external memory), devices for connecting terminals, and data transmission devices. The various devices built into the computer or connected to it are described below.

Microprocessor

A microprocessor is a large-scale integrated circuit (LSI) on a single chip that serves as the building block for computers of various types and purposes. It can be programmed to perform arbitrary logical functions: by changing its program, the microprocessor can be made to act as an arithmetic unit or as an input/output controller. Memory and input/output devices are connected to the microprocessor.

IBM PC computers use Intel microprocessors, as well as compatible microprocessors from other companies.

Microprocessors differ in type (model) and clock frequency (the rate at which elementary operations are performed, given in megahertz, MHz). The most common Intel models are the 8088, 80286, 80386SX, 80386DX, 80486, Pentium, Pentium Pro, Pentium II and Pentium III, listed in order of increasing performance and price. The same model can be produced with different clock frequencies; the higher the frequency, the higher the performance and the price.

The earlier Intel 8088, 80286 and 80386 microprocessors have no built-in instructions for floating-point arithmetic, so a separate math coprocessor (8087, 80287 or 80387) can be installed to speed up calculations with floating-point numbers.

Memory

Random access memory (RAM) and read-only memory (ROM) form the computer's internal memory, which the microprocessor accesses directly while it works. Any information to be processed is first copied from external memory (magnetic disks) into RAM. RAM holds the data and programs being processed at the moment; after processing, the information is written back to external memory. The contents of RAM exist only during a work session and are irretrievably lost when the PC is switched off or the power fails. Therefore, during work the user must regularly save information that needs long-term storage from RAM to magnetic disks to avoid losing it.

The more RAM a computer has, the greater its computing capabilities. The amount of information is measured in bytes; one byte is a group of eight bits (binary zeros and ones). In these units, the amount of information stored in RAM or on a floppy disk can be written as 360 KB, 720 KB or 1.2 MB, where 1 KB = 1024 bytes and 1 MB (megabyte) = 1024 KB. A hard drive can hold 500 MB, 1000 MB or more.
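The unit conversions above can be checked with a few lines of Python. This is a minimal sketch that uses the binary convention stated in the text (1 KB = 1024 bytes, 1 MB = 1024 KB); the function names are purely illustrative.

```python
# Units of information as defined in the text:
# 1 byte = 8 bits, 1 KB = 1024 bytes, 1 MB = 1024 KB.
BITS_PER_BYTE = 8
KB = 1024        # bytes in a kilobyte
MB = 1024 * KB   # bytes in a megabyte

UNIT_FACTORS = {"B": 1, "KB": KB, "MB": MB}


def to_bytes(amount: int, unit: str) -> int:
    """Convert a whole number of B, KB or MB to bytes."""
    return amount * UNIT_FACTORS[unit]


def to_bits(amount: int, unit: str) -> int:
    """Convert a whole number of B, KB or MB to bits (8 bits per byte)."""
    return to_bytes(amount, unit) * BITS_PER_BYTE


# Sizes mentioned in the text: floppy disks, IBM PC XT RAM, a hard drive.
for amount, unit in [(360, "KB"), (720, "KB"), (640, "KB"), (500, "MB")]:
    print(f"{amount} {unit} = {to_bytes(amount, unit):,} bytes")
```

For instance, 640 KB works out to 655,360 bytes.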

On the IBM PC XT, RAM is usually 640 KB; on the IBM PC AT, more than 1 MB; on higher-end IBM PC models, from 1 to 8 MB, and it can be 16, 32 MB or even more: memory can be expanded by adding memory chips to the computer's main board.

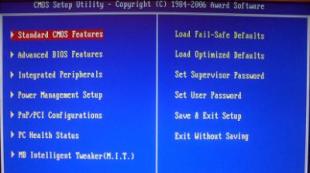

Unlike RAM, ROM permanently stores the same information; the user can read it but cannot change it. ROM is usually small, from 32 to 64 KB. It holds programs written at the factory, mainly intended to initialize the computer when it is switched on.

The first megabyte of memory is usually divided into two parts. The first 640 KB can be used by application programs and the operating system (OS). The remaining 384 KB are used for service purposes:

- for the part of the OS stored in ROM (the BIOS), which tests the computer, starts loading the OS and provides basic low-level input/output services;

- for the video memory that holds the image shown on the screen;

- for software extensions (usually in ROM) supplied with additional devices installed in the computer.
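The division of the first megabyte described above corresponds to the classic IBM PC memory map. The sketch below encodes it as a table of address ranges; the boundaries are the conventional ones, but the exact use of the C0000h-EFFFFh area varied between machines, so treat this layout as a simplified model. The names are illustrative.

```python
# Simplified map of the first megabyte of the IBM PC address space.
# Boundaries follow the classic layout; use of C0000h-EFFFFh varied (simplification).
MEMORY_MAP = [
    (0x00000, 0x9FFFF, "conventional memory: OS and application programs"),
    (0xA0000, 0xBFFFF, "video memory: image shown on the screen"),
    (0xC0000, 0xEFFFF, "ROM extensions of additional devices"),
    (0xF0000, 0xFFFFF, "BIOS ROM: self-test, OS loading, basic I/O"),
]


def region_size_kb(start: int, end: int) -> int:
    """Size of an inclusive address range in kilobytes."""
    return (end - start + 1) // 1024


def region_of(address: int) -> str:
    """Return the description of the region containing a physical address."""
    for start, end, purpose in MEMORY_MAP:
        if start <= address <= end:
            return purpose
    raise ValueError(f"address {address:#x} is outside the first megabyte")


for start, end, purpose in MEMORY_MAP:
    print(f"{start:05X}h-{end:05X}h {region_size_kb(start, end):4d} KB  {purpose}")
```

The four regions add up to 640 + 128 + 192 + 64 = 1024 KB, i.e. exactly one megabyte.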

When the amount of memory (RAM) is quoted, it usually refers to this first part, which is sometimes too small to run some programs.

This problem is resolved using extended and expanded memory.

The Intel 80286 and 80386SX microprocessors can address up to 16 MB of RAM, and the 80386DX and 80486 up to 4 GB, but MS-DOS runs in the processor's real mode and cannot directly use more than 640 KB of RAM. To access the additional RAM, special programs (drivers) have been developed: a driver accepts a request from an application program and switches the microprocessor into "protected mode"; having completed the request, it switches the microprocessor back to its normal (real) mode.
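The memory limits quoted above follow from the width of each processor's physical address: n address bits can select 2^n bytes. The sketch below also shows the real-mode rule (segment * 16 + offset) under which MS-DOS programs run, which is why they see only 1 MB. The address widths listed (20, 24 and 32 bits) are standard figures for these Intel processors rather than values from the text; the function names are illustrative.

```python
# Physical address width of several Intel processors (in bits).
ADDRESS_BITS = {
    "8086/8088, and any CPU in real mode": 20,
    "80286, 80386SX": 24,
    "80386DX, 80486": 32,
}


def max_memory_bytes(address_bits: int) -> int:
    """An n-bit physical address can select 2**n different bytes."""
    return 2 ** address_bits


def real_mode_address(segment: int, offset: int) -> int:
    """Real-mode physical address: segment * 16 + offset.

    On the 8086 only 20 address lines exist, so results above FFFFFh
    wrap around; that detail is ignored in this sketch.
    """
    return (segment << 4) + offset


for cpu, bits in ADDRESS_BITS.items():
    print(f"{cpu}: {bits}-bit address -> {max_memory_bytes(bits) // 2**20} MB")

# The highest address reachable from segment F000h is the top of the first megabyte.
print(hex(real_mode_address(0xF000, 0xFFFF)))
```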

Cache

Cache is a special high-speed memory that serves as a buffer between the processor and RAM, speeding up the processor's work with RAM. In addition to the processor and memory, the PC contains:

- electronic circuits (controllers) that control the operation of various devices included in the computer (monitor, drives, etc.);

- input and output ports through which the processor exchanges data with external devices. There are specialized ports through which data is exchanged with the internal devices of the computer, and general-purpose ports to which various additional external devices (printer, mouse, etc.) can be connected.

General-purpose ports come in two types: parallel, designated LPT1 - LPT9, and asynchronous serial, designated COM1 - COM4. Parallel ports transfer data faster than serial ports but need more wires for the exchange. For example, the port for exchanging data with a printer is parallel, while the port for exchanging data with a modem over the telephone network is serial.

Graphics adapters

A monitor or display is a mandatory peripheral device of a PC and is used to display processed information from the computer's RAM.

By the number of colors used, displays are divided into monochrome and color, and by the type of information shown, into character displays (only characters are shown) and graphic displays (both characters and graphics are shown). A computer's video system consists of two parts: the monitor and the adapter. We see only the monitor; the adapter is hidden inside the computer case. The monitor itself contains essentially only a cathode ray tube, while the adapter contains the logic circuits that produce the video signal. The electron beam scans the whole screen in about 1/50 of a second, but the image changes relatively rarely, so the video signal must redraw (regenerate) the same image on every pass. To hold this image, the adapter has video memory.

In character (text) mode, the screen usually displays 25 lines of 80 characters each (2000 characters in total, about the number of characters on a standard typewritten sheet). In graphics mode, the screen resolution is determined by the characteristics of the monitor adapter board, the device that connects the monitor to the system unit.
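In text mode each screen position is stored in video memory as two bytes, the character code and a color attribute, so a full 80 x 25 screen occupies 2000 x 2 = 4000 bytes. The sketch below computes where a given cell lives; the two-bytes-per-cell layout and the attribute format (4-bit foreground, 3-bit background) are those of the standard IBM PC color text modes and are not stated in the text, and the helper names are hypothetical.

```python
COLUMNS, ROWS = 80, 25   # standard text screen
BYTES_PER_CELL = 2       # character code + attribute (color) byte


def cell_offset(row: int, col: int) -> int:
    """Byte offset of the character at (row, col) in text-mode video memory."""
    if not (0 <= row < ROWS and 0 <= col < COLUMNS):
        raise ValueError("position is off the screen")
    return (row * COLUMNS + col) * BYTES_PER_CELL


def attribute(foreground: int, background: int) -> int:
    """Pack a 4-bit foreground (16 colors) and a 3-bit background into one byte.

    Bit 7 (blinking) is left clear.
    """
    return ((background & 0x07) << 4) | (foreground & 0x0F)


screen_bytes = COLUMNS * ROWS * BYTES_PER_CELL
print(COLUMNS * ROWS, "characters,", screen_bytes, "bytes of video memory")
print(hex(attribute(15, 1)))  # bright white on blue
```

The last cell of the screen (row 24, column 79, counting from zero) starts at byte 3998.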

The quality of the image on the monitor screen depends on the type of graphics adapter used.

The most widely used adapter types are EGA, VGA and SVGA (Super VGA); VGA and SVGA are the most common today, and SVGA offers a very high resolution. The earlier CGA adapter is no longer used in modern computers.

Adapters differ in resolution (in graphics modes). Resolution is given by the number of dots (pixels) per line and the number of lines. For example, a monitor with a resolution of 720x348 displays 348 lines vertically, with 720 dots per line. Publishing systems use monitors with resolutions of 800x600 and 1024x768; such monitors are very expensive.

Screens come in standard (14-inch), enlarged (15-inch) and large, TV-like sizes (17, 20 and even 21 inches, i.e. about 54 cm diagonally); they can be color (from 16 to several tens of millions of colors) or monochrome.

The monitor adapter standard also determines the number of colors in the palette of color monitors: CGA in graphic mode has 4 colors, EGA has 64 colors, VGA has up to 256 colors, and SVGA has more than a million colors. In text mode, all of the listed standards allow you to reproduce 16 colors.
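Resolution and number of colors together determine how much video memory a graphics mode needs: each pixel requires enough bits to number all the colors (2 bits for 4 colors, 4 bits for 16, 8 bits for 256), and the total is width x height x bits per pixel. The sketch below assumes one packed value per pixel and ignores any padding or planar layout a real adapter might use; the color counts paired with each resolution are illustrative.

```python
def bits_per_pixel(colors: int) -> int:
    """Smallest number of bits able to number `colors` different colors."""
    if colors < 2:
        raise ValueError("need at least two colors")
    return (colors - 1).bit_length()


def video_memory_bytes(width: int, height: int, colors: int) -> int:
    """Bytes needed to store one full screen image at this resolution."""
    return width * height * bits_per_pixel(colors) // 8


# Resolutions mentioned in the text, with illustrative color counts.
for width, height, colors in [(720, 348, 2), (800, 600, 256), (1024, 768, 256)]:
    size = video_memory_bytes(width, height, colors)
    print(f"{width}x{height}, {colors} colors: {size / 1024:.1f} KB")
```

A 1024x768 image in 256 colors thus needs 786,432 bytes, exactly 768 KB of video memory.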

The choice of monitor depends on the tasks being solved on the PC. For example, a user who processes only text can manage with a monochrome character monitor, while a user who does computer-aided design (CAD) needs a color graphic monitor. For most applications, however, color graphic monitors and adapters are preferable.

Disk drives

Information storage devices, an integral part of any computer, are often called external storage media or external computer memory. They are designed for long-term storage of large amounts of information, and their contents do not depend on the current state of the PC. All data and programs are stored on external media, so this is where the user's library of data is built up and kept.

In personal computers, the main information storage devices are magnetic disk drives, which provide direct (random) access to information. Magnetic tape drives (streamers) have also appeared for PCs recently; they can hold very large amounts of information but provide only sequential access to it. Streamers therefore do not replace magnetic disk drives but only complement them. There are two kinds of magnetic disk drives: floppy disk drives (FDD) and hard disk drives (HDD).

Hard disk drives are designed for permanent storage of information. On an IBM PC with an 80286 microprocessor, hard disk capacity is usually 20 to 40 MB; with the 80386SX, 80386DX and 80486SX, up to 300 MB; with the 80486DX, up to 500-600 MB; and with the Pentium, more than 2 GB.

A hard drive is a non-removable magnetic disk protected by a hermetically sealed case and located inside the system unit. It may consist of several platters, each with two magnetic surfaces, stacked into one assembly.

A hard drive, unlike a floppy disk, allows you to store large amounts of information, which provides greater opportunities for the user.

When working with a hard disk, the user must know how much space is occupied by the data and programs stored on it and how much is free, keep track of how full the disk is, and organize the information on it sensibly.

Floppy disk drives (FDD) let you transfer information from one computer to another, store information that is not used constantly, and make archive copies of information stored on the hard drive. A floppy disk (diskette) is a thin disk of special material with a magnetic coating on its surface. Its plastic jacket has a rectangular write-protect notch, an opening through which the drive's read/write heads contact the magnetic disk, and a label with the diskette's parameters.

The main parameter of a floppy disk is its diameter. There are currently two main standards: floppy disks with a diameter of 3.5 and 5.25 inches (89 and 133 mm, respectively). As a rule, the IBM PC XT and IBM PC AT mainly use 5.25-inch floppy disks, while later IBM PC models use 3.5-inch ones.

To write and read information, the floppy disk is installed in the drive slot, which is located in the system unit. A PC may have one or two disk drives. Since a floppy disk is a removable device, it is used not only to store information, but also to transfer information from one PC to another.

Depending on their type (recording density), 5.25-inch floppy disks hold 360 KB, 720 KB or 1.2 MB.

The maximum capacity of a 3.5-inch floppy disk can be recognized by its appearance: 1.44 MB disks have an extra hole in the lower right corner, while 720 KB disks do not. These disks are enclosed in a hard plastic case, which significantly increases their reliability and durability. For this reason, new computers use 3.5-inch floppy disks instead of 5.25-inch ones.
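The capacity of a floppy disk follows from its geometry: sides x tracks per side x sectors per track x bytes per sector. The parameters below are the standard PC floppy formats with 512-byte sectors; they are not given in the text, so treat them as background figures. The calculation also shows that the "1.44 MB" label really means 1440 KB.

```python
from typing import NamedTuple

SECTOR_BYTES = 512  # standard sector size on PC floppy disks


class FloppyFormat(NamedTuple):
    name: str
    sides: int
    tracks: int             # tracks per side
    sectors_per_track: int

    def capacity_kb(self) -> int:
        """Formatted capacity in kilobytes (1 KB = 1024 bytes)."""
        total = self.sides * self.tracks * self.sectors_per_track * SECTOR_BYTES
        return total // 1024


FORMATS = [
    FloppyFormat('5.25" 360 KB', sides=2, tracks=40, sectors_per_track=9),
    FloppyFormat('5.25" 1.2 MB', sides=2, tracks=80, sectors_per_track=15),
    FloppyFormat('3.5" 720 KB', sides=2, tracks=80, sectors_per_track=9),
    FloppyFormat('3.5" 1.44 MB', sides=2, tracks=80, sectors_per_track=18),
]

for fmt in FORMATS:
    print(f"{fmt.name}: {fmt.capacity_kb()} KB")
```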

Write protection of floppy disks. 5.25-inch floppy disks have a write-protect notch; if this notch is covered (sealed), nothing can be written to the disk. 3.5-inch floppy disks have a write-protect hole with a sliding latch that allows or prohibits writing: when the hole is closed, writing is allowed; when it is open, writing is prohibited.
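The two disk sizes use opposite conventions, which is easy to mix up: on a 5.25-inch disk covering the notch protects the disk, while on a 3.5-inch disk closing the hole allows writing. A small sketch of that rule; the function name and its string arguments are hypothetical.

```python
def writing_allowed(disk_size: str, opening_covered: bool) -> bool:
    """Return True if data can be written to the floppy disk.

    disk_size: "5.25" or "3.5" (inches).
    opening_covered: True if the write-protect notch/hole is covered or closed.
    """
    if disk_size == "5.25":
        # A sealed notch means the disk is write-protected.
        return not opening_covered
    if disk_size == "3.5":
        # The latch closing the hole means writing is allowed.
        return opening_covered
    raise ValueError(f"unknown floppy disk size: {disk_size}")
```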

Initializing (formatting) floppy disks. Before using it for the first time, the floppy disk must be initialized (marked) in a special way.

In addition to conventional disk drives, modern computers have special drives for laser compact discs (CD-ROM), as well as for magneto-optical disks and Bernoulli disks.

CD-ROMs are compact discs; many large software packages for modern computers are distributed on them. CD-ROM drives differ in data transfer speed: single, double, quadruple speed and so on. Modern 24- to 36-speed drives work almost as fast as a hard drive.
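The "speed" of a CD-ROM drive is a multiple of the single-speed data rate of 150 KB/s (a standard figure, not stated in the text). The sketch below estimates the nominal transfer rate and the time needed to read a whole disc; real drives often reach their rated speed only on part of the disc, so these are best-case estimates.

```python
SINGLE_SPEED_KB_PER_S = 150  # nominal data rate of a 1x CD-ROM drive


def transfer_rate_kb_per_s(speed_factor: int) -> int:
    """Nominal data rate of an N-speed drive in KB/s."""
    return SINGLE_SPEED_KB_PER_S * speed_factor


def read_time_s(size_mb: float, speed_factor: int) -> float:
    """Time to read `size_mb` megabytes at the drive's nominal rate."""
    return size_mb * 1024 / transfer_rate_kb_per_s(speed_factor)


for speed in (1, 2, 4, 24, 36):
    minutes = read_time_s(600, speed) / 60
    print(f"{speed}x: {transfer_rate_kb_per_s(speed)} KB/s, 600 MB in {minutes:.1f} min")
```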

A typical CD holds more than 600 MB (600 million characters), but it can only be read and does not allow recording. Rewritable CDs and the corresponding drives are already available, but they are very expensive. Sets of high-quality photographs, video clips and films are now sold on CD, and game collections with rich music and sound effects, computer encyclopedias and educational programs are all released on CD.

Printers and plotters

A printer (printing device) is designed to output text and graphic information from the computer's RAM onto paper, and the paper can be either sheet or roll.

The main advantage of printers is the ability to use a large number of fonts, which allows you to create quite complex documents. Fonts differ in the width and height of letters, their inclination, and the distances between letters and lines.

To work with the printer, the user must select the required font and set the print parameters to match the width of the output document and the size of the paper. Accordingly, dot matrix printers, for example, come in two versions: with a narrow carriage (for paper the width of a standard typewritten A4 sheet) and with a wide carriage (for wider paper, such as A3).

Keep in mind that the "computer sheet" (the area the PC provides to the user for text) is much larger than the monitor screen: it can span hundreds of columns and thousands of lines, depending on the free RAM and the software used. When printing, the entire computer sheet is printed, not just the part visible on the screen. Therefore, text prepared for printing must first be divided into pages, with the text width set according to the font and the paper width.
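Dividing text into pages amounts to wrapping it to the printable line width and then cutting the resulting lines into page-sized groups. A minimal sketch, assuming a fixed-width font so that the width can be counted in characters; the 80-character and 60-line figures in the example are hypothetical.

```python
import textwrap


def paginate(text: str, width: int, lines_per_page: int) -> list[list[str]]:
    """Wrap `text` to `width` characters and split the lines into pages."""
    lines: list[str] = []
    for paragraph in text.split("\n"):
        # textwrap.wrap returns [] for an empty paragraph; keep it as a blank line.
        lines.extend(textwrap.wrap(paragraph, width) or [""])
    return [lines[i:i + lines_per_page] for i in range(0, len(lines), lines_per_page)]


document = "A long report prepared for printing. " * 200
pages = paginate(document, width=80, lines_per_page=60)
print(len(pages), "pages;", len(pages[0]), "lines on the first page")
```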

Printers can also output graphics, including in color. There are hundreds of printer models, of the following types: dot matrix, inkjet, character (letter) and laser.

Until recently, the most common printers were dot matrix printers, whose print head contains a vertical row of thin metal rods (pins). The head moves along the printed line, and the pins strike the paper through an ink ribbon at the right moments, forming the image on paper. Cheap printers use 9-pin heads and give rather mediocre print quality, which can be improved by printing in several passes. Printers with 24 or 48 pins give higher quality at sufficient speed. Printing takes from 10 to 60 seconds per page. When choosing a printer, users are usually interested in whether it can print Russian and Kazakh letters. Two cases are possible:

- Fonts with Kazakh and Russian letters are built into the printer. In this case, the printer is ready to print Kazakh and Russian texts immediately after it is switched on. If the codes of the Kazakh and Russian letters in the printer are the same as in the computer, the texts can be printed with the DOS PRINT or COPY commands; if the codes do not match, transcoding drivers must be used.

- Fonts with Kazakh and Russian letters are missing from the printer ROM. In this case, before printing, you must run a driver that loads the fonts into the printer; the loaded fonts disappear from the printer's memory when it is switched off.
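A transcoding driver essentially maps each character code used by the computer to the code the printer expects. The sketch below shows the idea with Python's built-in codecs, assuming (hypothetically) that the computer uses the DOS code page CP866 and the printer expects KOI8-R; only Russian letters are shown, since Kazakh letters require other code tables.

```python
def transcode(data: bytes, source: str = "cp866", target: str = "koi8_r") -> bytes:
    """Re-encode bytes from one character table to another.

    Raises UnicodeEncodeError if a character has no code in the target table.
    """
    return data.decode(source).encode(target)


text = "Привет"                      # Russian text as stored by the computer
computer_bytes = text.encode("cp866")
printer_bytes = transcode(computer_bytes)

print(computer_bytes.hex(" "))
print(printer_bytes.hex(" "))
```

The letters stay the same; only their numeric codes change, which is exactly what the printer needs when its table differs from the computer's.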

Dot matrix printers are easy to operate and the cheapest, but their speed and print quality are rather low, especially when printing graphics.

In inkjet printers, the image is formed by tiny drops of special ink. They are more expensive than dot matrix printers and require careful maintenance. They operate silently and have many built-in fonts but are very sensitive to paper quality. Their print quality and speed are higher than those of dot matrix printers. Their drawbacks include fairly high ink consumption and printouts that are sensitive to moisture.

Laser printers work on the principle of xerography: the image is transferred to paper from a special drum to which toner particles are electrically attracted. Unlike a photocopier, the printing drum is electrified by a laser beam according to commands from the computer. The resolution of these printers ranges from 300 to 1200 dpi, and printing text takes from 3 to 15 seconds per page. Laser printers offer the best print quality and performance but are the most expensive of the printer types reviewed.

A plotter (graph plotter) also outputs information onto paper and is used mainly for graphics. Plotters are widely used in design automation, when drawings of the products being developed must be produced. Plotters are divided into single-color and color, and also by the quality of their output.

Computer input devices

The keyboard is still the main device for entering information into the computer; it is used to enter text and to give commands to the computer. We will learn more about the keyboard's functions in the next lesson.

The mouse, together with the keyboard, is used to control the computer. It is a small separate device with two or three buttons that the user moves across the surface of the desk, pressing the buttons as needed to perform operations.

A scanner lets you enter any type of information from a sheet of paper into a computer; the procedure is simple, convenient and quite fast.

Additional devices

Modems (modulator-demodulators) are used to transfer data between computers and differ mainly in data transfer speed, which today ranges from 2400 bits/s to several tens of thousands of bits/s. Modems support particular standards for data exchange procedures (protocols). A modem is essential for connecting to a computer network (Internet, Relcom, FidoNet, etc.) or for using e-mail.
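Modem speed is given in bits per second, so the time to send a file is its size in bits divided by the speed. The sketch below ignores protocol overhead, error correction and compression, all of which change the real figure; the 28,800 bit/s speed in the example is an illustrative value.

```python
def transfer_time_s(size_bytes: int, bits_per_second: int) -> float:
    """Idealized transfer time: 8 bits per byte, no protocol overhead."""
    return size_bytes * 8 / bits_per_second


FLOPPY_1_44_MB = 1_474_560  # bytes on a 1.44 MB floppy disk (1440 KB)

for speed in (2400, 28_800):
    minutes = transfer_time_s(FLOPPY_1_44_MB, speed) / 60
    print(f"{speed} bit/s: about {minutes:.0f} min for a full 1.44 MB floppy")
```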

There are also fax modems that combine the functions of a modem with a fax machine. Using a fax modem, you can send text information not only to your subscriber’s computer, but also to a simple fax machine and, accordingly, receive it. Fax modems are somewhat more expensive than modems, but their capabilities are wider.

The multimedia capabilities of computers are often discussed nowadays. Multimedia is a modern way of presenting information that combines a computer's text, graphics and sound capabilities, i.e. the combined use of images, sound, text, music and animation to present data on the screen more effectively. A computer with such capabilities must have a sound card and a CD-ROM drive for reproducing images, soundtracks and video from CDs. Multimedia computers may also contain a special video card for connecting a video camera, a VCR or a TV signal receiver.

Control questions

1. List the main PC components and additional devices.

2. What printers are used when running a PC?

3. What video adapters do you know? What is the difference between a display and a video adapter?

4. What floppy disks are used on your computer?

5. What is a modem and what is it used for?

The world's first microprocessor, the four-bit Intel 4004, appeared in 1971. It was followed in 1974 by the eight-bit Intel 8080, on which the very first microcomputers were built. These machines had very limited capabilities and were regarded as amusing but not very useful toys. In 1978-1979 Intel released its first sixteen-bit microprocessors, the Intel 8086 and Intel 8088. In 1981 IBM released a personal computer based on the Intel 8088, the IBM PC (PC - Personal Computer), whose capabilities were already close to those of the minicomputers of the time. Thanks to their low cost and ease of use, these computers very quickly became immensely popular all over the world. Soon afterwards came the IBM PC/XT (XT - eXtended Technology), with a maximum RAM of up to 1 MB. The next major step in microprocessor technology was the IBM PC/AT (AT - Advanced Technology), released in 1984 and based on the Intel 80286 microprocessor, with the maximum RAM expanded to 16 MB. By the end of the 1980s the thirty-two-bit Intel 80386, with a maximum memory capacity of 4 GB, had been released. In the early 1990s a more powerful thirty-two-bit microprocessor, the Intel 80486, appeared, combining more than a million transistors on one chip. The Intel family continued to develop, and in 1993 personal computers appeared based on the Pentium microprocessor, known during development as the Intel 80586. Several Pentium-brand models are now in use: Pentium II, Pentium MMX (with extended multimedia capabilities), Pentium III and Pentium 4. Each new model extends the instruction set, raises the clock frequency and the possible amounts of RAM and hard disk space, and increases overall efficiency. New, more advanced models are constantly being developed.

Computers of the IBM PC family were so successful that they began to be copied in almost every country. The copies used the same data encoding methods and instruction set but differed in technical characteristics, appearance and cost. Such machines are called IBM-compatible personal computers. Programs written for the IBM PC run just as well on IBM-compatible computers; in such cases the computers are said to be software compatible.

Other architectures

Machines of the IBM PC family belong to the so-called CISC architecture (CISC - Complex Instruction Set Computer). The instruction sets of processors built on this architecture provide a separate instruction for every possible action; for example, the instruction set of the Intel Pentium processor contains more than 1000 different instructions. The larger the instruction set, the more bits are needed to encode each instruction. For example, an instruction set of only four actions needs just two bits per instruction, eight actions need three bits, sixteen need four, and so on. Expanding the instruction set therefore increases the number of bytes taken up by one machine instruction and hence the memory needed to store a whole program. In addition, the average execution time of one machine instruction increases, and with it the average execution time of the whole program.
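The rule in the paragraph above (4 actions need 2 bits, 8 need 3, 16 need 4) is the smallest n with 2^n >= the number of instructions. A short sketch; it counts only the bits for the operation code and ignores operands, which real instruction formats also encode.

```python
def opcode_bits(instruction_count: int) -> int:
    """Smallest number of bits n such that 2**n >= instruction_count."""
    if instruction_count < 1:
        raise ValueError("need at least one instruction")
    return (instruction_count - 1).bit_length()


for count in (4, 8, 16, 1000):
    print(count, "instructions ->", opcode_bits(count), "bits")
```

With more than 1000 instructions, at least 10 bits are needed just to tell them apart.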

In the mid-1980s the first processors with a reduced instruction set appeared, built on the so-called RISC architecture (RISC - Reduced Instruction Set Computer). Their instruction sets are much more compact, so programs composed of these instructions require significantly less memory and execute faster. However, such instruction sets provide no separate instructions for many complex actions. When such actions are needed, they are emulated using the available instructions. Generally speaking, emulation is the execution of the actions of one device by the means of another without loss of functionality; here it means performing a complex action that has no instruction of its own by a sequence of the instructions the processor does have. Naturally, this costs some processor efficiency.
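As an illustration of emulation, consider a hypothetical processor whose reduced instruction set has addition, shifts and a bit test but no multiplication instruction. Multiplication can then be carried out by the classic shift-and-add method; the step counter makes the efficiency cost visible, since one "multiply" turns into several loop iterations.

```python
def multiply_emulated(a: int, b: int) -> tuple[int, int]:
    """Multiply non-negative integers using only add, shift and bit-test steps.

    Returns (product, number_of_loop_iterations).
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    product = 0
    steps = 0
    while b:
        if b & 1:          # lowest bit of the multiplier is set
            product += a   # add the shifted multiplicand
        a <<= 1            # shift multiplicand left (times 2)
        b >>= 1            # shift multiplier right (next bit)
        steps += 1
    return product, steps


print(multiply_emulated(13, 11))  # (143, 4)
```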

Apple's well-known Macintosh computers belong to the RISC architecture; their instruction set in some cases gives them higher performance than machines of the IBM PC family. Another important difference is that many capabilities that IBM PC machines gain by purchasing, installing and configuring additional hardware are built into Macintosh machines and require no hardware configuration. Macintosh machines are, however, more expensive than IBM PC machines with similar parameters.

The machine families of Sun Microsystems, Hewlett-Packard and Compaq also belong to the RISC architecture. Other architectures are represented by portable computers of the Notebook and Handheld classes, which are small, light and battery-powered. These qualities make them suitable for business trips, business meetings, scientific conferences and so on, in short, wherever access to permanently installed computers is limited or impossible, for example on a train or plane.

Control questions

1. Define the concept of “computer architecture”.

2. Name the three main groups of computer devices.

3. What is a number system and what number systems are used in personal computers to encode information?

4. What are the differences and similarities between a bit and a byte?

5. How is text information encoded in a PC?

6. How is graphic information encoded in a PC?

7. Define the concepts “pixel”, “raster”, “resolution”, “scanning”.

8. What is memory capacity, in what units is it measured?

9. How are RAM and external memory similar and different from each other?

10. Define the concepts of “loading” and “starting” a program.

11. Describe floppy disk drives.

12. Describe the basic rules for handling floppy disks.

13. Define the concepts “working surface”, “track”, “sector”, “cluster”.

14. How can you determine the capacity of disk storage media?

15. Why do you need to format magnetic disks?

16. Describe hard disk drives.

17. Describe optical and magneto-optical disk drives.

18. Compare floppy and hard magnetic disks, optical and magneto-optical disks.

19. How many disk devices can a personal computer have? How are they designated?

20. Describe the main functions of a processor.

21. Define the concepts “command system”, “machine command”, “machine program”.

22. Indicate the main technical characteristics of processors.

23. What is a translator and why is it needed?

24. What is a bus needed for? What does its width determine?

25. What is a motherboard?

26. What computer devices are located in the system unit?

27. Give a classification of displays and indicate their basic models.

28. What are adapters used for?

29. Name the main operating modes of the keyboard.

30. What are the function keys for?

31. What is a keyboard shortcut?

32. What is a text cursor?

33. Explain how text scrolls.

34. What is a text screen?

35. Describe the basic ways to move a text cursor.

36. What is a mouse for?

37. Indicate the main parameters and types of printers.

38. What is a scanner used for? What other devices with similar purposes do you know?

39. What devices must be included in a computer so that it can work in a multimedia environment?

40. What are modems used for?

41. What is a computer family?

42. Which computers are considered software compatible?

43. Name the basic models of the IBM PC family. How are they different from each other?

Figure: components of a computer (system unit, keyboard, monitor, electronic circuits, power unit, drives, hard drive).

Basic peripheral devices of personal computers.

Additional devices

You can connect various input/output devices to the system unit of an IBM PC computer, thereby expanding its functionality. Many devices are connected through special sockets (connectors), usually located on the back wall of the computer system unit. In addition to the monitor and keyboard, such devices are:

- printer: for printing text and graphics;

- mouse: a device that makes it easier to enter information into the computer;

- joystick: a manipulator in the form of a hinged handle with a button, used mainly for computer games;

- and other devices.

These devices are connected with special wires (cables). To prevent mistakes (“foolproofing”), the connectors for these cables are made different, so that a cable simply cannot be plugged into the wrong socket.

Some devices can be inserted inside the computer system unit, for example:

- modem: for exchanging information with other computers over the telephone network;

- fax modem: combines the capabilities of a modem and a fax machine;

- streamer: for storing data on magnetic tape.

Some devices, for example, many types of scanners (devices for entering pictures and texts into a computer), use a mixed connection method: only an electronic board (controller) that controls the operation of the device is inserted into the computer system unit, and the device itself is connected to this board with a cable.

Main classes of personal computer software and their purpose. The concept of installing and uninstalling programs.

Programs running on a computer can be divided into three categories:

- application programs, which directly perform the work users need: editing texts, drawing pictures, processing data arrays, etc.;

- system programs, which perform various auxiliary functions, such as making copies of the information in use, providing help information about the computer, checking that computer devices work, etc.;

- tool systems (programming systems), which are used to create new computer programs.

It is clear that the boundaries between these three classes of programs are very arbitrary; for example, a system program may include a text editor, i.e. applied program.

Installation of programs– installing the program on a PC. In this case, information about the program is often written to the PC registry.

Uninstalling programs– the reverse procedure of installation, i.e. Removing the program from the PC.

Drivers. An important class of system programs are driver programs. They expand DOS's ability to manage computer input/output devices (keyboard, hard drive, mouse, etc.), RAM, etc. Using drivers, you can connect new devices to your computer or use existing devices in non-standard ways.

Purpose and main functions of the Total Commander program.

The Total Commander file manager provides another way to work with files and folders in Windows. It offers a simple, visual way to perform file-system operations such as moving between directories and creating, renaming, copying, moving, searching for, viewing, and deleting files and directories, among others.

Total Commander is not a standard Windows program, i.e. it is not installed together with Windows itself; it must be installed separately afterwards.

The working area of the Total Commander window differs from many others in that it is divided into two parts (panels), each of which can display the contents of a different disk or directory.

For example, a user can display the contents of drive D: in the left panel and open one of the directories on drive C: in the right panel. This makes it possible to work with files and folders in both parts of the window at the same time.

Working with files and folders in Total Commander:

· Moving from directory to directory

· Selecting files and directories

· Copying files and directories

· Moving files and directories

· Directory creation

· Deleting files and directories

· Renaming files and directories

· Quick directory search
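The operations in this list are done interactively in Total Commander. To show what each of them does to the file system, here is a rough sketch of the same operations using Python's standard library (`pathlib` and `shutil`). It is only an illustration, not how Total Commander works internally. The directory and file names (`left`, `right`, `notes.txt`) are hypothetical stand-ins for the contents of the two panels.

```python
from __future__ import annotations

import shutil
import tempfile
from pathlib import Path


def file_operations_demo(root: Path) -> list[str]:
    """Run basic file-manager operations inside `root` and list what remains."""
    left = root / "left"    # hypothetical directory shown in the left panel
    right = root / "right"  # hypothetical directory shown in the right panel
    left.mkdir()            # directory creation
    right.mkdir()

    notes = left / "notes.txt"
    notes.write_text("draft")

    shutil.copy2(notes, right / "notes.txt")        # copying to the other panel
    renamed = notes.rename(left / "notes_v1.txt")   # renaming
    shutil.move(renamed, right / "notes_v1.txt")    # moving to the other panel
    (right / "notes.txt").unlink()                  # deleting a file
    left.rmdir()                                    # deleting an (empty) directory

    # Searching: rglob walks the whole tree recursively.
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(file_operations_demo(Path(tmp)))
```

When run as a script, it works in a temporary directory and prints what is left: `['right', 'right/notes_v1.txt']`.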

The concept of archiving and unarchiving files. Basic techniques for working with the ARJ archiver program.

As a rule, archiver programs let you place compressed copies of files into an archive file (archiving), extract files from an archive (unarchiving), view the archive's table of contents, and so on. Archivers differ in archive format, speed, compression ratio, and ease of use.
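ARJ uses its own archive format, so the following is only an analogy. It is a minimal sketch of the archive, list, and extract cycle described above, using the ZIP format from Python's standard `zipfile` module. The file names are hypothetical.

```python
from __future__ import annotations

import tempfile
import zipfile
from pathlib import Path


def archive_roundtrip(workdir: Path) -> dict:
    """Archive one file, read the archive's table of contents, extract it again."""
    original = b"spreadsheet row\n" * 1000      # repetitive data compresses well
    source = workdir / "data.txt"
    source.write_bytes(original)

    archive = workdir / "backup.zip"
    # Archiving: put a compressed copy of the file into the archive.
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.write(source, arcname="data.txt")

    restored_dir = workdir / "restored"
    with zipfile.ZipFile(archive) as zf:
        info = zf.getinfo("data.txt")
        result = {
            "contents": zf.namelist(),            # table of contents
            "original_size": info.file_size,
            "compressed_size": info.compress_size,
        }
        zf.extractall(restored_dir)               # unarchiving

    result["restored_ok"] = (restored_dir / "data.txt").read_bytes() == original
    return result


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(archive_roundtrip(Path(tmp)))
```

For highly repetitive data like this, the compressed size is a small fraction of the original 16,000 bytes. Real compression ratios depend on the data and on the archive format.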

The functions of the ARJ program are set by specifying a command code and modes. The command code is a single letter written on the command line immediately after the program name; it specifies what the program should do. For example, A adds files to the archive, T tests (checks) the archive, E extracts files from the archive, etc.

To specify more precisely what ARJ should do, you can set modes. Modes can be given anywhere on the command line after the command code, preceded either by a "-" character (-V, -M, etc.) or by a "/" character (/V, /M, etc.). However, the two styles cannot be mixed on the same command line.
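The rules just described can be captured in a small validator: a one-letter command code directly after the program name, then modes introduced by "-" or "/" anywhere after it, with the two prefixes never mixed. This is a hypothetical sketch of those stated rules only, not ARJ's actual parser. It ignores ARJ's real list of commands and modes and treats every argument that does not start with "-" or "/" as the archive name, a directory, or a file name.

```python
from __future__ import annotations


def parse_arj_command_line(args: list[str]) -> tuple[str, list[str], list[str]]:
    """Split ARJ-style arguments (program name already removed) into
    (command code, modes, other arguments such as the archive name)."""
    if not args or len(args[0]) != 1 or not args[0].isalpha():
        raise ValueError("the first argument must be a one-letter command code")
    command = args[0].upper()

    modes: list[str] = []
    others: list[str] = []
    prefixes: set[str] = set()
    for arg in args[1:]:
        if len(arg) > 1 and arg[0] in "-/":
            prefixes.add(arg[0])          # remember which mode prefix was used
            modes.append(arg[1:].upper())
        else:
            others.append(arg)            # archive name, directory, file names
    if len(prefixes) > 1:
        raise ValueError("'-' and '/' mode prefixes cannot be mixed")
    return command, modes, others
```

For example, `parse_arj_command_line(["a", "-v", "docs", "*.txt"])` returns `("A", ["V"], ["docs", "*.txt"])`, while mixing `-v` and `/m` on one line raises `ValueError`.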

Modes for selecting archived files. The ARJ program has three main modes for storing files in an archive:

Add - adds all files to the archive;

Update - adds new files to the archive and replaces archived files with newer versions;

Freshen - replaces files already in the archive with their newer versions.

Extracting files from the archive. ARJ extracts files from its own archives. Call format: ARJ command [modes] archive_name [directory\] [file_names].
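The difference between the three storing modes is easiest to see as a rule for choosing which files on disk get written into the archive. The sketch below models files as name-to-modification-time dictionaries, which is a hypothetical simplification. It assumes the usual archiver convention that Update both adds missing files and replaces archived files that have a newer copy on disk. It is an illustration, not ARJ's code.

```python
from __future__ import annotations


def select_files(mode: str, on_disk: dict[str, int], in_archive: dict[str, int]) -> list[str]:
    """Return the disk files that would be stored in the archive.

    Both dictionaries map a file name to its modification time (larger = newer).
    """
    mode = mode.lower()
    selected = []
    for name, mtime in on_disk.items():
        is_new = name not in in_archive
        is_newer = not is_new and mtime > in_archive[name]
        if mode == "add":
            take = True                  # store every file
        elif mode == "update":
            take = is_new or is_newer    # new files plus newer versions
        elif mode == "freshen":
            take = is_newer              # only newer versions of archived files
        else:
            raise ValueError(f"unknown mode: {mode}")
        if take:
            selected.append(name)
    return sorted(selected)
```

With `on_disk = {"a.txt": 5, "b.txt": 3, "c.txt": 9}` and `in_archive = {"a.txt": 5, "b.txt": 1}`, Add selects all three files, Update selects `b.txt` and `c.txt`, and Freshen selects only `b.txt`.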

Network structure

The nodes and backbones of the Internet make up its infrastructure. The Internet offers several services (E-mail, USENET, TELNET, WWW, FTP, etc.), and E-mail was one of the first. Today, most Internet traffic comes from the World Wide Web service.

The principle of the WWW service was developed by the physicists Tim Berners-Lee and Robert Cailliau at the European research center CERN (Geneva) in 1989. Today the Web contains millions of pages with documents of many different types.

The components of the Internet are organized into a common hierarchy. The Internet unites many different computer networks and individual computers that exchange information with one another. Information on the Internet is stored on Web servers, which exchange it over high-speed backbones.

These backbones include dedicated analog and digital telephone lines, fiber-optic channels, and radio channels, including satellite links. Servers connected by high-speed backbones form the core of the Internet.

Users connect to the network through the routers of local Internet service providers (ISPs), which have permanent Internet connections through regional providers. A regional provider connects to a larger national provider that has nodes in various cities of the country.

The networks of national providers are in turn joined into the networks of transnational, or first-tier, providers. Together, the interconnected networks of first-tier providers make up the global Internet.
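The chain of providers described above can be modelled as a tree in which every network points to the provider it connects through. The sketch below uses made-up provider names (all hypothetical) and simply follows those links upward. Real routing between providers is far more complex, with peering and multiple upstream providers, so this only illustrates the hierarchy.

```python
from __future__ import annotations

# Hypothetical upstream links: each network -> the provider it connects through.
UPSTREAM = {
    "home-user": "local-isp",
    "local-isp": "regional-provider",
    "regional-provider": "national-provider",
    "national-provider": "tier1-provider",
    "office-user": "city-isp",
    "city-isp": "regional-provider",
}


def path_to_top(node: str, upstream: dict[str, str] = UPSTREAM) -> list[str]:
    """Follow upstream links until reaching a network with no upstream (first tier)."""
    path = [node]
    while path[-1] in upstream:
        path.append(upstream[path[-1]])
    return path


def meeting_point(a: str, b: str) -> str:
    """Return the lowest provider that both users' chains pass through."""
    above_a = path_to_top(a)
    for node in path_to_top(b):
        if node in above_a:
            return node
    raise ValueError("no common provider")
```

`path_to_top("home-user")` climbs through the local, regional, and national providers to `tier1-provider`. `meeting_point("home-user", "office-user")` returns `regional-provider`, the first provider the two chains share.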

Searching for information on the Internet

The main task of the Internet is to provide the information people need. The Internet is an information space in which you can find an answer to almost any question. It is a huge global network into which smaller networks flow like tributaries into a river. Any user with a PC and the appropriate software can connect to it and use it for a variety of purposes: leisure, study, reading scientific papers, sending email, etc.

Basic methods of searching for information on the Internet:

1. Direct search using hypertext links.

Since sites on the WWW are linked to one another, information can be found by sequentially viewing related pages in a browser. Although this fully manual method may seem anachronistic on a Web of more than 60 million nodes, "manual" browsing is often the only option at the final stages of a search, when mechanical "digging" gives way to deeper analysis. Catalogs, classified and thematic lists, and small directories of all kinds also belong to this type of search.

2. Use of search engines. Today this is the main, and in practice the only, method for a preliminary search, which can yield a list of network resources to examine in detail.

Search engines are typically queried with keywords, which are passed to the search servers as search arguments (what to look for). Done properly, building a keyword list requires preliminary work on compiling a thesaurus.
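Search servers answer keyword queries from an index built in advance rather than by reading pages at query time. A minimal sketch of that idea is an inverted index that maps each word to the pages containing it and returns the pages that contain all the query keywords. The page texts and example.com URLs are invented, and real search engines add ranking, stemming, and much more.

```python
from __future__ import annotations

import re
from collections import defaultdict

# Hypothetical pages: URL -> text.
PAGES = {
    "http://example.com/excel": "Excel workbook sheets and cell blocks",
    "http://example.com/arj": "ARJ archiver commands and modes",
    "http://example.com/tc": "Total Commander copies files between panels",
}


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of pages that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> list[str]:
    """Return the pages that contain every keyword of the query."""
    words = tokenize(query)
    if not words:
        return []
    return sorted(set.intersection(*(index.get(w, set()) for w in words)))
```

`search(build_index(PAGES), "archiver modes")` returns only the ARJ page, because it is the only page containing both words.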

3. Search using special tools. This fully automated method can be very effective for initial searches. One technology of this kind relies on specialized programs, spiders, which automatically scan Web pages looking for the required information. In effect, this is an automated version of browsing by hypertext links, described above (search engines use similar methods to build their index tables). Automatic search results, of course, still require further processing.

This method is advisable when search engines cannot provide the necessary results (for example, because the query is non-standard and cannot be expressed adequately with the tools search engines offer). Choosing between a spider and search servers is a variant of the classic choice between universal and specialized tools.
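A spider is, in effect, an automated version of following hypertext links. The sketch below crawls an in-memory link graph breadth-first and collects the pages whose text contains a keyword. The graph, page names, and `max_pages` limit are hypothetical. A real spider would fetch pages over HTTP, parse HTML, and respect site rules.

```python
from __future__ import annotations

from collections import deque

# Hypothetical mini-Web: page -> (page text, outgoing links).
WEB = {
    "start": ("portal page", ["news", "science"]),
    "news": ("daily news", ["start", "weather"]),
    "science": ("physics papers about lasers", ["lasers"]),
    "weather": ("weather forecast", []),
    "lasers": ("laser physics and optics", ["science"]),
}


def crawl(start: str, keyword: str, web: dict[str, tuple[str, list[str]]] = WEB,
          max_pages: int = 100) -> list[str]:
    """Breadth-first crawl from `start`; return pages whose text mentions `keyword`."""
    seen = {start}
    queue = deque([start])
    found = []
    visited = 0
    while queue and visited < max_pages:
        page = queue.popleft()
        visited += 1
        text, links = web.get(page, ("", []))
        if keyword.lower() in text.lower():
            found.append(page)
        for link in links:
            if link not in seen:   # never queue the same page twice
                seen.add(link)
                queue.append(link)
    return found
```

`crawl("start", "physics")` returns `["science", "lasers"]`. Lowering `max_pages` to 3 stops the crawl before `lasers` is reached, which is the kind of trade-off a real spider has to make.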

4. Analysis of new resources. Searching newly created resources may be necessary during repeated search cycles, when looking for the latest information, or to analyze how the research subject develops over time. Another reason is that most search engines update their indexes with a significant delay caused by the huge volumes of data they process, and this delay is usually longer the less popular the topic. This consideration can be very important when searching in a highly specialized subject area.

Basic concepts of spreadsheets

The Microsoft Excel working window contains the following controls: title bar, menu bar, toolbars, formula bar, work area, and status bar.

An Excel document is called a workbook. A workbook is a collection of worksheets. The document window in Excel displays the current worksheet. Each worksheet has a name, which appears on its sheet tab.

Interface structure

After you start Microsoft Excel, its window appears on the screen. The program's working window contains:

· The title bar, which includes the system menu, the title itself, and the window control buttons.

· Menu bar.

· Toolbars: Formatting and Standard.

· Status bar.

· Formula bar, which includes: name field; enter, cancel and function wizard buttons; and a function line.

Context menu

In addition to the main menu, which is constantly on the screen in all Windows applications, Excel, like other MS Office programs, actively uses the context menu. The context menu provides quick access to frequently used commands for a given object in a given situation.

When you right-click an icon, a cell, a selected group of cells, or an embedded object, a menu of basic commands opens next to the mouse pointer. The commands in the context menu always apply to the active (selected) object.

Toolbars

Ways to show/hide toolbars:

First way:

1. Right-click any toolbar. A context menu listing the toolbars appears.

2. Select or clear the check box next to the required toolbar by clicking its name in the list.

Second way:

1. Choose View on the menu bar. The View menu appears.

2. Point to Toolbars. The Toolbars submenu appears.

3. Select or clear the check box next to the name of the desired toolbar.

Formula bar

The formula bar is used to enter and edit values or formulas in cells or charts, and to display the address of the current cell.

Workbook, sheet

A workbook is a document containing several sheets, which may include tables, charts, or macros. All worksheets are saved in one file.

Cell block

A block of cells can be a row or part of a row, a column or part of a column, or a rectangle made up of several rows and columns or parts of them. The address of a block is given by references to its first and last cells separated by a colon (for example, B1:D6).
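The block address notation can be made concrete with a short parser that turns an address such as B1:D6 into its size. This is a sketch that assumes simple A1-style references (no sheet names, no `$` signs). The function names are illustrative.

```python
from __future__ import annotations

import re

CELL = re.compile(r"^([A-Z]+)(\d+)$")


def column_number(letters: str) -> int:
    """Convert column letters to a 1-based number: A -> 1, Z -> 26, AA -> 27."""
    n = 0
    for ch in letters:
        n = n * 26 + (ord(ch) - ord("A") + 1)
    return n


def parse_cell(ref: str) -> tuple[int, int]:
    """Return (row, column) for a reference such as 'B1'."""
    m = CELL.match(ref.strip().upper())
    if not m:
        raise ValueError(f"not a cell reference: {ref!r}")
    return int(m.group(2)), column_number(m.group(1))


def block_size(address: str) -> tuple[int, int, int]:
    """Return (rows, columns, cells) for a block address such as 'B1:D6'."""
    first, last = (parse_cell(part) for part in address.split(":"))
    rows = abs(last[0] - first[0]) + 1
    cols = abs(last[1] - first[1]) + 1
    return rows, cols, rows * cols
```

`block_size("B1:D6")` gives `(6, 3, 18)`: rows 1 to 6 and columns B to D, 18 cells in total.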

Data types in MS Excel

There are two types of data that can be entered into Excel worksheet cells - constants and formulas.

Constants, in turn, are divided into numeric values, text values, date and time values, Boolean values, and error values.

Numeric values

Numeric values can contain the digits 0 to 9 as well as the special characters + - E e ( ) . , $ % /

To enter a numeric value, select the desired cell and type the number. What you type appears both in the cell and in the formula bar. When you have finished, press Enter to write the number into the cell. By default, pressing Enter makes the cell one row below active, but on the Edit tab of the Tools > Options command you can choose the direction in which the selection moves after entry, or turn the move off altogether. If, after typing a number, you press one of the cell navigation keys (Tab, Shift+Tab…), the number is committed to the cell and the input focus moves to the adjacent cell.

Sometimes you need to enter long numbers. Excel stores at most 15 significant digits and may display such numbers in scientific notation. The precision shown in the cell is chosen so that the number fits in the cell.

The value shown in the cell is called the displayed value.

The value shown in the formula bar is called the stored value.

The number of digits displayed depends on the column width. If the column is not wide enough, Excel either rounds the value or displays ### characters; in that case, try widening the column.
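The relation between the stored value (at most 15 significant digits) and a displayed value that must fit the column can be imitated in a few lines. This is a rough model under stated assumptions, not Excel's actual formatting algorithm. It measures column width in characters and produces the displayed value by reducing the number of significant digits until the text fits, falling back to `#` characters.

```python
def stored_value(x: float) -> float:
    """Keep at most 15 significant digits, as a spreadsheet cell does."""
    return float(f"{x:.15g}")


def displayed_value(x: float, width: int) -> str:
    """Show `x` with as many significant digits as fit into `width` characters."""
    for digits in range(15, 0, -1):
        text = f"{x:.{digits}g}"   # 'g' switches to scientific notation when needed
        if len(text) <= width:
            return text
    return "#" * width             # even one significant digit does not fit
```

Here `displayed_value(123456789, 7)` gives `'1.2e+08'` and `displayed_value(123456789, 4)` gives `'####'`, mirroring the rounding and `###` behaviour described above, while the stored value itself is unchanged.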

Text values

Entering text works exactly like entering numbers, and you can type almost any characters. If the text is longer than the cell is wide, it spills over into the adjacent cell, although it actually belongs to its own cell. If the adjacent cell also contains data, the overflowing text is cut off at the cell border.

To fit a column's width to its longest entry, double-click the column's right border in the column header area. For example, double-clicking the line between the headers of columns A and B automatically adjusts the width of column A to its longest value.

If you need to enter a number as text, put an apostrophe in front of it or enclose it in quotation marks: '123 or "123".

You can tell which value (numeric or text) is entered into a cell by its alignment. By default, text is left aligned while numbers are right aligned.
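The rules in the last few paragraphs can be summarized in a sketch: a leading apostrophe forces text, otherwise anything that parses as a finite number is numeric, and the resulting type decides the default alignment. It is simplified and hypothetical. Real Excel also recognizes dates, times, percentages, currency, and Boolean values, which are ignored here.

```python
from __future__ import annotations

import math


def classify_entry(raw: str) -> tuple[str, object, str]:
    """Return (type, value, default alignment) for what the user typed."""
    if raw.startswith("'"):
        # Apostrophe prefix: keep the rest as text; the apostrophe itself is not shown.
        return "text", raw[1:], "left"
    try:
        value = float(raw)
    except ValueError:
        return "text", raw, "left"
    if not math.isfinite(value):   # 'inf' or 'nan' typed by the user stay text
        return "text", raw, "left"
    return "number", value, "right"
```

`classify_entry("123")` yields a right-aligned number, while `classify_entry("'123")` yields the left-aligned text `123`.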

When you enter values into a selected range of cells, input proceeds from left to right and top to bottom. Pressing Tab (or Enter, if the move direction after Enter is set to the right) commits the value and moves the cursor to the adjacent cell on the right. When it reaches the end of a row of the block, it moves to the leftmost cell of the next row.
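The left-to-right, top-to-bottom order described above is a row-major walk over the selected block. The sketch below lists the cell addresses in that order. It is a hypothetical helper that takes numeric row bounds and, for brevity, single-letter columns A to Z.

```python
from __future__ import annotations

from string import ascii_uppercase


def entry_order(first_row: int, last_row: int, first_col: str, last_col: str) -> list[str]:
    """Cell addresses in the order values are entered into a selected block."""
    c1 = ascii_uppercase.index(first_col)
    c2 = ascii_uppercase.index(last_col)
    order = []
    for row in range(first_row, last_row + 1):      # top to bottom
        for col in ascii_uppercase[c1:c2 + 1]:     # left to right within a row
            order.append(f"{col}{row}")
    return order
```

`entry_order(1, 2, "B", "D")` returns `['B1', 'C1', 'D1', 'B2', 'C2', 'D2']`.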

Changing values in a cell

To change a value in a cell before committing it, use the Del and Backspace keys, as in any text editor. To change a value that has already been committed, double-click the cell; the cursor appears in the cell and you can edit its contents. Alternatively, select the cell, click in the formula bar where its contents are displayed, and edit the data there. When you have finished editing, press Enter to commit the changes. If you make a mistake, you can undo it with the Undo button (Ctrl+Z).

Creating charts in MS Excel.

To create a chart, first enter its data into an Excel sheet. Select the data and use the Chart Wizard, which steps you through choosing a chart type and various chart options. In the Chart Wizard - Step 1 of 4 - Chart Type dialog box, specify the type of chart you want. In the Chart Wizard - Step 2 of 4 - Chart Source Data dialog box, you can specify the data range and how the series are displayed on the chart. In the Chart Wizard - Step 3 of 4 - Chart Options dialog box, you can further adjust the chart's appearance using the options on six tabs; as you change them, check the preview to make sure the chart looks the way you want. In the Chart Wizard - Step 4 of 4 - Chart Location dialog box, choose where to place the chart by doing one of the following:

Select As new sheet to place the chart on a new sheet.

Select As object in to place the chart as an object on an existing sheet.

Click Finish.


MS PowerPoint. Presentation program capabilities. Basic concepts.

PowerPoint XP is an application for preparing presentations whose slides are shown to an audience either as printed materials or as an on-screen slide show. After creating or importing the content of a report, you can quickly decorate it with pictures and supplement it with charts and animation effects. Navigation elements make it possible to create interactive presentations controlled by the viewer.

PowerPoint files are called presentations and their elements are slides.

Design templates

Microsoft PowerPoint lets you create design templates, which can be applied to a presentation to give it a finished, professional look.

A design template is a template whose formatting can be used to prepare other presentations.

Purpose and characteristics of the main devices of a personal computer such as IBM PC.

Computers are tools used to process information.

Basic blocks of the IBM PC

Typically, IBM PC personal computers consist of three parts (blocks):

system unit;

keyboard, which allows you to enter characters into the computer;

monitor (or display) - for displaying text and graphic information.

Although the system unit looks the least impressive of these parts of the computer, it is the “main” part of the computer. It contains all the main components of the computer:

electronic circuits that control the operation of the computer (microprocessor, RAM, device controllers, etc., see below);

power supply unit, which converts mains power into the low-voltage direct current supplied to the computer's electronic circuits;

floppy disk drives, used for reading and writing floppy disks;

hard disk drive, used for reading and writing a non-removable hard magnetic disk (hard drive).

IBM is a well-known company today. It has left a huge mark on the history of computing, and even today it has not slowed its pace. Interestingly, not everyone knows why IBM is so famous. Everyone has heard of the IBM PC, knows that IBM made laptops and once competed seriously with Apple. However, the blue giant's achievements also include a huge number of scientific discoveries and the introduction of many inventions into everyday life. People often wonder where a particular technology came from, and quite often the answer is IBM. Five Nobel laureates in physics received their prizes for work done at this company.

This material is intended to shed light on the history of the formation and development of IBM. At the same time, we will talk about its key inventions, as well as future developments.

Time of formation

IBM's origins go back to 1896, when, decades before the advent of the first electronic computers, the outstanding engineer and statistician Herman Hollerith founded a company to produce tabulating machines, the Tabulating Machine Company (TMC). Hollerith, a descendant of German immigrants who was openly proud of his roots, was prompted to do this by the success of the calculating and analytical machines he had first built himself. The essence of the invention of the blue giant's "grandfather" was an electrical system for encoding data as numbers: information was recorded on cards in which holes were punched in a particular order, after which the punched cards could be sorted mechanically. This development, patented by Hollerith in 1889, caused a real sensation and won the 29-year-old inventor an order to supply his machines to the US Census Office, which was preparing for the 1890 census.

The success was stunning: processing the collected data took only one year, compared with the eight years it took US Census Bureau statisticians to obtain the results of the 1880 census. This demonstrated in practice the advantage of computing machinery for such tasks and largely predetermined the later "digital boom." The money earned and contacts established helped Hollerith create TMC in 1896. At first, the company tried to produce commercial machines, but on the eve of the 1900 census it switched to producing counting and analytical machines for the US Census Bureau. Three years later, however, when the government contract ended, Hollerith again turned his attention to the commercial use of his developments.

Although the company was growing rapidly, the health of its founder and driving force was steadily deteriorating. In 1911 this forced him to accept millionaire Charles Flint's offer to buy TMC. The deal was valued at $2.3 million, of which Hollerith received $1.2 million. In fact, it was not a simple purchase of shares but a merger of TMC with the International Time Recording Company (ITRC) and the Computing Scale Corporation (CSC), which gave birth to the Computing Tabulating Recording (CTR) corporation, the forerunner of today's IBM. And if many people call Herman Hollerith the grandfather of the "blue giant," Charles Flint is considered its father.

Flint was undeniably a financial genius with a knack for envisioning strong corporate alliances, many of which outlived him and still play a decisive role in their fields. He took an active part in creating the rubber manufacturer U.S. Rubber and American Chicle, once one of the world's leading chewing gum producers (since 2002 called Adams and part of Cadbury Schweppes). For his success in consolidating American corporate power he was called the "father of trusts." For the same reason, however, assessments of his role are highly ambiguous as to whether its impact was positive or negative, though never as to its significance. Paradoxically, Flint's organizational skills were highly valued by government departments, and he often turned up where ordinary officials could not act openly or were less effective. In particular, he is credited with taking part in a secret project to buy ships around the world and convert them into warships during the Spanish-American War of 1898.

Created by Charles Flint in 1911, CTR produced a wide range of equipment, including time-recording systems, scales, automatic meat slicers and, most importantly for the later creation of the computer, punched card equipment. In 1914, Thomas J. Watson Sr. joined CTR as general manager, and in 1915 he became its president.

The next major event in CTR's history was its renaming to International Business Machines, or IBM for short. This happened in two stages. First, in 1917, the company entered the Canadian market under the name International Business Machines Co., Limited, apparently to emphasize that it was now a truly international corporation. In 1924, the American company also adopted the IBM name.

During the Great Depression and World War II

The next 25 years of IBM's history were relatively stable. Even during the Great Depression, the company kept working at the same pace and laid off almost no employees, which could not be said of many other companies.

Several important events for IBM fall within this period. In 1928 the company introduced a new 80-column punched card. Called the IBM Card, it was used for decades, first by the company's calculating machines and later by its computers. Another significant event was a large government order to maintain employment records for 26 million people, which the company itself recalls as "the biggest accounting operation of all time." This order also opened the door to other government contracts for the blue giant, just as at the very beginning of TMC.

Book "IBM and the Holocaust"

There are several accounts of IBM's collaboration with the Nazi regime in Germany. The main source here is Edwin Black's book "IBM and the Holocaust," whose title makes clear what the blue giant's calculating machines were used for: according to the book, they kept statistics on prisoners, including Jewish prisoners. The book even lists the codes used to organize the data: code 8 for Jews, code 11 for Gypsies, code 001 for Auschwitz, code 002 for Buchenwald, and so on.

According to IBM management, however, the company only sold equipment to the Third Reich, and how the equipment was used afterwards was not its concern. Many American companies did the same. IBM even opened a plant in Berlin in 1933, the year Hitler came to power. There is, however, another side to the Nazis' use of IBM equipment: after Germany's defeat, the blue giant's machines made it possible to trace the fates of many people. This did not stop various groups affected by the war, and by the Holocaust in particular, from demanding an official apology from IBM, which the company refused to give. It refused even though its employees who remained in Germany continued working during the war and communicated with the company's management through Geneva. IBM disclaimed all responsibility for the activities of its German enterprises during the war years of 1941 to 1945.

In the USA, IBM worked for the government during the war, and not always in its own line of business. Its factories and workers produced rifles (in particular the Browning Automatic Rifle and the M1 Carbine), bombsights, engine parts, and more. Thomas Watson, still head of the company, set a nominal profit of 1% on these products, and even this small amount went not into the blue giant's coffers but into a fund for the widows and orphans of those killed in the war.

IBM's calculating machines in the United States were also put to use, performing mathematical calculations, logistics work, and other tasks for the war effort. They were also used extensively in the Manhattan Project, which created the atomic bomb.

Time for big mainframes

The middle of the last century was of great importance for the modern world: the first digital computers began to appear, and IBM took an active part in creating them. The very first American programmable computer was the Mark I (full name Aiken-IBM Automatic Sequence Controlled Calculator Mark I). Remarkably, it was based on the ideas of Charles Babbage, the inventor of the first computer, who never completed it; in the 19th century that was very hard to do. IBM took his designs, transferred them to the technology of the day, and the Mark I was released. It was built in 1943 and officially put into operation a year later. The "Mark" line did not last long: four versions were produced in all, the last of which, the Mark IV, was introduced in 1952.

In the 1950s, IBM received another large government order: to develop computers for SAGE (Semi-Automatic Ground Environment), a military system designed to track and intercept potential enemy bombers. The project gave the blue giant access to research at the Massachusetts Institute of Technology, where IBM worked on a computer that could well serve as a prototype of modern systems. It had a built-in screen and a magnetic core memory array, supported digital-to-analog and analog-to-digital conversion, was networked, could transmit digital data over a telephone line, and supported multiprocessing. It was also possible to connect so-called "light guns" to it, devices that were once widely used as an alternative to the joystick on game consoles and arcade machines. There was even support for the first algebraic computer language.

IBM built 56 computers for the SAGE project. Each cost $30 million at 1950s prices. 7,000 company employees worked on them, which at that time amounted to 20% of the company’s entire staff. In addition to big profits, the blue giant was able to gain invaluable experience, as well as access to military developments. Later, all this was used in the creation of computers of the next generations.

The next major event for IBM was the release of System/360, which marked practically the change of an entire era. Before it, the blue giant produced systems based on relays and vacuum tubes. For example, the Mark I was followed in 1948 by the Selective Sequence Electronic Calculator (SSEC), which consisted of 21,400 relays and 12,500 vacuum tubes and could perform several thousand operations per second.

Besides the SAGE computers, IBM worked on other military projects. The Korean War, for example, called for faster means of calculation than a large programmable calculator. This led to the fully electronic IBM 701 (built from vacuum tubes rather than relays), which worked 25 times faster than the SSEC while taking up a quarter of the space. Tube computers continued to be improved over the next few years; the IBM 650, for example, became famous, with about 2,000 units produced.

No less significant for today's computer technology was the invention in 1956 of the RAMAC 305. It became the prototype of what is today called an HDD, or simply a hard drive. The first hard drive weighed about 900 kilograms and held only 5 MB. Its main innovation was a stack of 50 continuously rotating round aluminum platters on which information was stored as magnetized regions. This provided random access to files and at the same time greatly increased the speed of data processing. But this pleasure was not cheap: it cost $50,000 at the prices of the time. Over 50 years, progress has reduced the cost of one megabyte of HDD storage from $10,000 to $0.00013, based on the average cost of a 1 TB hard drive.
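The figures in this paragraph are consistent with each other, as a quick calculation using only the numbers given in the text shows (taking 1 TB as 10^6 MB):

```latex
\frac{\$50\,000}{5\ \text{MB}} = \$10\,000\ \text{per MB},
\qquad
\$0.00013\ \text{per MB} \times 10^{6}\ \text{MB} = \$130\ \text{per 1 TB drive}.
```

So the $0.00013 figure corresponds to an average price of about $130 for a 1 TB drive.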

The middle of the last century was also marked by transistors replacing vacuum tubes. The blue giant made its first attempts to use them in 1958 with the announcement of the IBM 7070 system. Somewhat later, the 1401 and 1620 models appeared: the first was intended for various business tasks, and the second was a small scientific computer used in designing highways and bridges. In other words, IBM built both compact specialized computers and bulkier but much faster systems. An example of the former is the 1440, developed in 1962 for small and medium-sized businesses; an example of the latter is the 7094, in effect a supercomputer of the early 1960s, used in the aerospace industry.

Another step toward System/360 was the development of terminal systems, in which each user had a separate monitor and keyboard connected to one central computer. This was a forerunner of the client/server architecture combined with a multi-user operating system.

As often happens, to make the most of innovations, you have to take all previous developments, find their common ground, and then design a new system that uses the best aspects of new technologies. The IBM System/360, introduced in 1964, became just such a computer.

In some ways it resembles modern computers, which can be upgraded as needed and to which various external devices can be connected. A new range of 40 peripheral devices was developed for System/360, including the IBM 2311 and IBM 2314 hard drives, IBM 2401 and 2405 tape drives, punched card equipment, OCR devices, and various communications interfaces.

Another important innovation is unlimited virtual space. Before System/360, things like this cost a pretty penny. Of course, this innovation required some reprogramming, but the result was worth it.

Earlier we described separate specialized computers for science and business. Admittedly, this division was inconvenient for both users and developers. System/360 became a universal system suitable for most tasks. Moreover, far more people could now use it: up to 248 terminals could be connected simultaneously.

Creating the IBM System/360 was far from cheap. The design work alone ran for three quarters and cost about a billion dollars. Another $4.5 billion was invested in factories and new equipment for them. In total, five factories were opened and 60 thousand employees were hired. Thomas Watson Jr., who succeeded his father at the head of the company in 1956, called the project "the most expensive private commercial project in history."

The 70s and the IBM System/370 era

The next decade in IBM's history was less revolutionary, but several important events took place. The 70s opened with the release of System/370 in 1970. After several incremental modifications of System/360, this system was a more complex and substantial redesign of the original mainframe.

The most important innovation of System/370 was support for virtual memory, that is, extending RAM by using disk storage. Today this principle is actively used in modern operating systems of the Windows and Unix families. However, the first versions of System/370 did not support it. IBM made virtual memory widely available in 1972 with the introduction of the System/370 Advanced Function.

Of course, the list of innovations does not end there. Later System/370 models with the Extended Architecture (370-XA) moved from 24-bit to 31-bit addressing. Dual-processor configurations were supported, as was 128-bit (extended-precision) floating-point arithmetic. Another important feature of System/370 was full backward compatibility with System/360 software.

The company's next mainframe was the System/390 (or S/390), introduced in 1990. It was a 32-bit system, although it retained compatibility with 24-bit System/360 and 31-bit System/370 addressing. In 1994, it became possible to combine several System/390 mainframes into one cluster. This technology is called Parallel Sysplex.

After System/390, IBM introduced z/Architecture. Its main innovation is support for a 64-bit address space. At the same time, new mainframes were released with more processors (first 32, then 54). z/Architecture appeared in 2000, so it is a relatively recent development. Today it is represented by System z9 and System z10, which remain steadily popular. Moreover, they are still backward compatible with System/360 and later mainframes, a record in its own right.
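The addressing widths mentioned in this section (24-bit for System/360, 31-bit, and 64-bit for z/Architecture) determine how much memory can be addressed directly: an n-bit address can name 2^n distinct bytes. The Python sketch below, with hypothetical helper names, does this arithmetic and prints the results in binary (1024-based) units; the same calculation gives the 16 MB limit of the 24-bit IBM PC/AT discussed later.

```python
# Binary (1024-based) size units, smallest to largest.
UNITS = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"]

def address_space_bytes(bits: int) -> int:
    """Number of distinct byte addresses that an address of `bits` bits can name."""
    return 2 ** bits

def human_size(n: int) -> str:
    """Format a byte count with binary units (exact for powers of two)."""
    unit = 0
    while n >= 1024 and unit < len(UNITS) - 1:
        n //= 1024  # exact here, since the inputs are powers of two
        unit += 1
    return f"{n} {UNITS[unit]}"

for label, bits in [
    ("System/360 (24-bit)", 24),
    ("31-bit addressing", 31),
    ("z/Architecture (64-bit)", 64),
]:
    print(f"{label}: {human_size(address_space_bytes(bits))}")
```

This prints 16 MiB, 2 GiB and 16 EiB respectively, which shows why each widening of the address was a major architectural step.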

This closes the topic of large mainframes, which is why we have followed their history up to the present day.

Meanwhile, a conflict between IBM and the authorities was brewing. It was preceded by the exit of the blue giant's main competitors from the market for large computer systems; in particular, NCR and Honeywell decided to focus on more profitable niche segments. System/360, meanwhile, turned out to be so successful that no one could compete with it. As a result, IBM effectively became a monopolist in the mainframe market.

All this led to a lawsuit on January 19, 1969. Predictably, IBM was accused of violating Section 2 of the Sherman Act, which prohibits monopolizing, or attempting to monopolize, a market, in this case the market for electronic computer systems, especially those intended for business use. The case dragged on until 1983 and led IBM to seriously reconsider its approach to doing business.

The antitrust proceedings may also have influenced the Future Systems project, which was supposed to once again combine all the knowledge and experience of past projects (just as in the days of System/360) and create a new type of computer that would surpass every system built before. Work on it took place between 1971 and 1975. The official reason for its cancellation was economic: according to analysts, it would not have paid off the way System/360 did. Or perhaps IBM really decided to rein itself in a little because of the ongoing litigation.

Another very important event in the computer world is attributed to the same decade, although it actually occurred in 1969: IBM began to sell software and software development services separately from the hardware. Today this surprises few people; even the modern generation of home users of pirated software is used to the idea that programs cost money. But back then, complaints, press criticism and lawsuits rained down on the blue giant. As a result, IBM charged separately only for application programs, while the software that controlled the operation of the computer (System Control Programming), essentially the operating system, remained free.

And at the very beginning of the 80s, a certain Bill Gates from Microsoft proved that an operating system could be sold for money.

Time for small personal computers

Until the 80s, IBM concentrated on large orders, several of them from the government and several from the military. It usually supplied its mainframes to educational and scientific institutions, as well as to large corporations. It is unlikely that anyone bought a System/360 or 370 cabinet for the home, along with a dozen storage cabinets full of magnetic tape and hard drives, even though those drives were already a couple of times smaller than the RAMAC 305.

The blue giant ignored the needs of the average consumer, who needs much less to be completely happy than NASA or a university does. This gave the garage-born Apple a chance to get on its feet, with its logo of Newton under an apple tree, soon replaced by a simple bitten apple. Apple came up with a very simple idea: a computer for everyone. Neither Hewlett-Packard, where Steve Wozniak had proposed it, nor the other large IT companies of the time supported this idea.

By the time IBM realized this, it was already late. The world was already admiring the Apple II, the most popular and successful Apple computer in its entire history (and not the Macintosh, as many believe). But better late than never. It was not hard to guess that this market was only at the very beginning of its development. The result was the IBM PC (model 5150), introduced on August 12, 1981.

The most surprising thing is that this was not IBM's first personal computer. That title belongs to the 5100, released back in 1975. It was much more compact than mainframes and had its own display, data storage and keyboard. But it was intended for scientific problems and was poorly suited to business users and hobbyists, not least because of its price of about $20,000.

The IBM PC changed not only the world, but also the company's approach to building computers. Before this, IBM designed every computer entirely in-house, without help from third parties. With the IBM 5150 things turned out differently. At the time, the personal computer market was divided among the Commodore PET, the Atari family of 8-bit systems, the Apple II and Tandy Corporation's TRS-80s, so IBM was in a hurry not to miss the moment.

A group of 12 people working in Boca Raton, Florida, under the leadership of Don Estridge, was assigned to Project Chess. They completed the task in about a year. One of their key decisions was to use third-party components. This saved both money and the time of IBM's own research staff.

Initially, Estridge chose the IBM 801 processor and an operating system developed specifically for it. But a little earlier, the blue giant had launched the Datamaster microcomputer (full name System/23 Datamaster, or IBM 5322), which was based on the Intel 8085, an 8-bit processor from the generation before the Intel 8088. This experience was precisely the reason for choosing the Intel 8088, which, like the 8085, had an 8-bit external data bus, for the first IBM PC. The IBM PC even had expansion slots matching those of the Datamaster. The Intel 8088, in turn, required a new operating system, DOS, which was offered at just the right time by a small company called Microsoft. The monitor and printer were not newly designed either: the monitor had been developed earlier by IBM's Japanese division, and the printer was made by Epson.

The IBM PC was sold in various configurations. The most expensive one cost $3,005. It was equipped with an Intel 8088 processor running at 4.77 MHz, which could optionally be supplemented with an Intel 8087 coprocessor that performed floating-point calculations in hardware. It had 64 KB of RAM. One or two 5.25-inch floppy drives served as permanent data storage. Later, IBM began supplying models that allowed cassette storage to be connected.

It was impossible to install a hard drive in the IBM 5150 because its power supply was too weak. However, the company offered an expansion module, the Expansion Unit (also known as the IBM 5161 Expansion Chassis), with a 10 MB hard drive. It had its own power supply, and a second HDD could be installed in it. The unit itself had 8 expansion slots, while the computer had 5. To connect the Expansion Unit, an Extender Card was installed in the computer and a Receiver Card in the module. The computer's other expansion slots were usually occupied by a video card, I/O port cards and so on. RAM could be expanded to 256 KB.

"Home" IBM PC

The cheapest configuration cost $1,565. It had the same processor but only 16 KB of RAM. It came without a floppy drive or a CGA monitor, but it had an interface for cassette drives and a video card that could be connected to a TV. Thus, the expensive version of the IBM PC was aimed at business (where, incidentally, it became quite widespread), and the cheaper one at the home.

The IBM PC introduced one more innovation: the BIOS (Basic Input/Output System). It is still used in modern computers, albeit in slightly modified form. The newest motherboards already ship with EFI firmware or even simplified versions of Linux, but it will likely be a few years before the BIOS disappears.

The IBM PC architecture was open and publicly documented. Any manufacturer could make peripherals and software for the IBM computer without buying a license. At the same time, the blue giant sold the IBM PC Technical Reference Manual, which contained the complete BIOS source code. As a result, a year later the world saw the first "IBM PC compatible" computers from Columbia Data Products. Compaq and other companies followed. The ice had broken.

IBM Personal Computer XT

On March 8, 1983, while the entire USSR was celebrating International Women's Day, IBM released its next, rather "male" product: the IBM Personal Computer XT (short for eXtended Technology), or IBM 5160. The new product replaced the original IBM PC, introduced two years earlier, and was an evolutionary step in personal computers. The processor was still the same, but the base configuration now had 128 KB of RAM, later 256 KB. The maximum RAM increased to 640 KB.

The XT came with one 5.25-inch floppy drive, a 10 MB Seagate ST-412 hard drive and a 130 W power supply. Later, models with a 20 MB hard drive appeared. PC-DOS 2.0 served as the base OS. For expansion, the XT offered eight slots on the 8-bit bus that later became known as ISA.

IBM Personal Computer/AT

Many old-timers in the computer world probably remember the AT case standard, which was used until the end of the last century. It all started, once again, with IBM and its IBM Personal Computer/AT, or model 5170. AT stands for Advanced Technology. The new system was the blue giant's second generation of personal computers.

The most important innovation of the new product was the Intel 80286 processor, running at 6 and later 8 MHz. It brought many new capabilities. In particular, the computer moved completely to a 16-bit bus and gained 24-bit addressing, which allowed RAM to grow to 16 MB. The motherboard also gained a battery powering a CMOS chip that stored 50 bytes of settings; earlier models had no such battery.

For data storage, the AT used 5.25-inch drives that supported 1.2 MB floppy disks, while the previous generation offered no more than 360 KB. The standard hard drive capacity was now 20 MB, and the drive was twice as fast as the previous model. Monochrome video cards and monitors gave way to EGA adapters, capable of displaying up to 16 colors at a resolution of 640x350. For professional graphics work, a PGC (Professional Graphics Controller) video card could be ordered for $4,290; it displayed up to 256 colors at 640x480 and supported 2D and 3D acceleration for CAD applications.

To support all these innovations, the operating system had to be substantially reworked; the result was released as PC-DOS 3.0.

Not yet a ThinkPad, no longer an IBM PC

Many people probably know that the first commercially successful portable computer, released in 1981, was the Osborne 1 from Osborne Computer Corporation. It was a suitcase-sized machine weighing 10.7 kg and costing $1,795. The idea of such a device was not unique: its first prototype had been developed back in 1976 at the Xerox PARC research center. However, by the mid-80s, sales of the Osborne had dwindled to nothing.